Microsoft has achieved something it never managed since Bing launched in June 2009: people actually want to use Bing. So did I, although I had given up on Bing after using it for a few years. It was getting soooo annoying, pushing MSN content on the homepage. In a way, it still is.

I was expecting this banner to go away after I completed the advertorial, but it does not. Every time you open Bing, it flies in your face again:

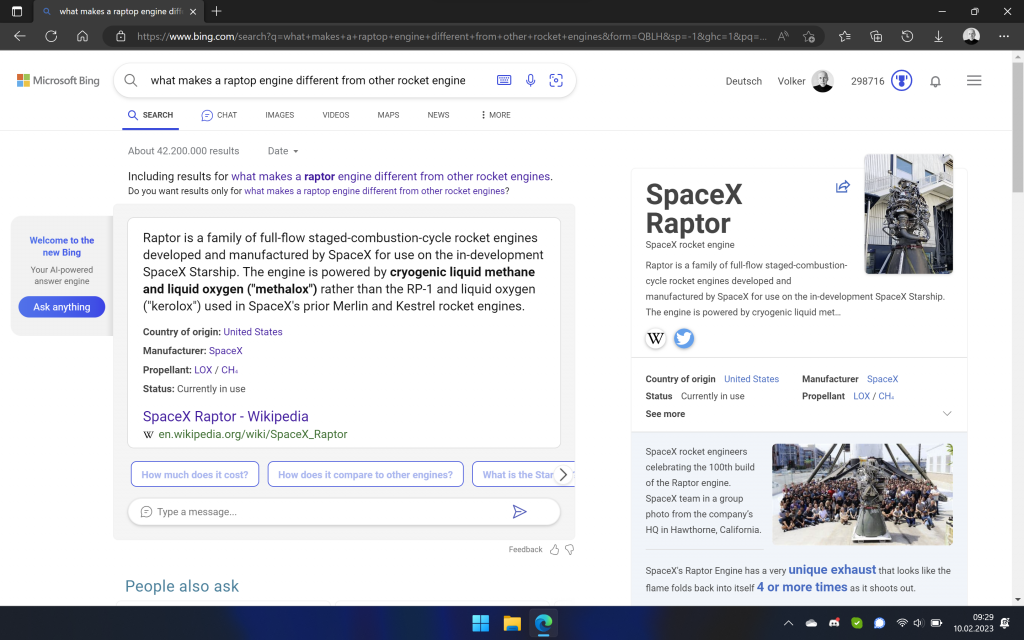

Let’s ask Bing a question: “what is the difference between a raptop engine and a regular rocket engine”. I misspelled Raptor as raptop, but I left the typo in anyway. You get the search engine result you might expect, returned in a split second:

On the top of the page, next to search, is a link to Chat, and this is where Bing starts a conversation. If you came from a normal search query, it just pulls your last question over as the start of the conversation.

Now things slow down. Bing is running an LLM (large language model), which takes a tremendous amount of resources. This is expensive! And it may be the reason why it does not go into this mode without you asking for a chat.

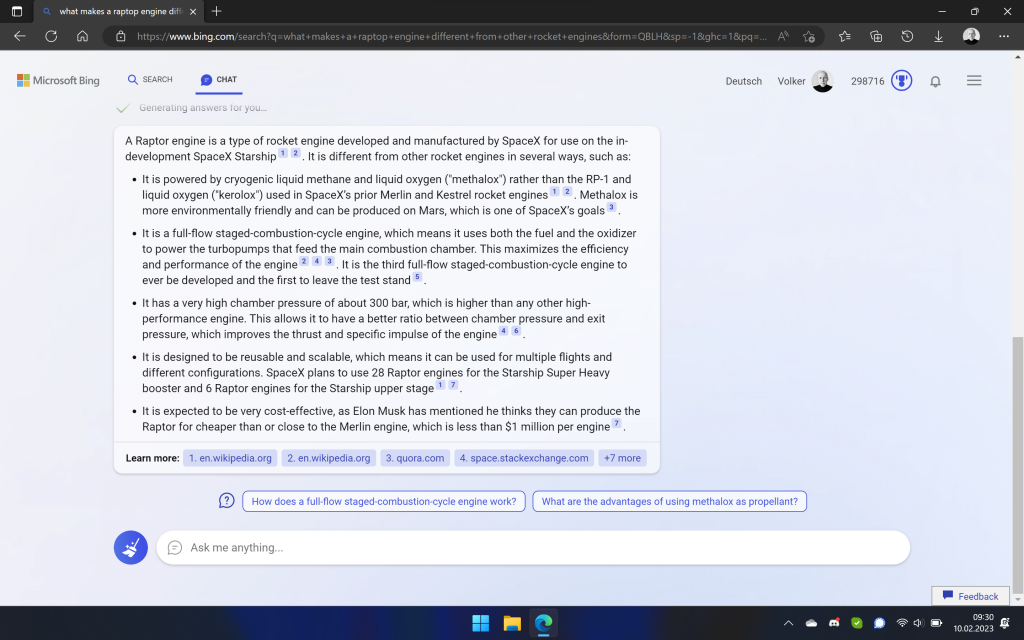

The results are not displayed in a split second. Instead, the answer is typed out to you, as if you had a slow Internet connection. You just wait while Bing is doing its thing, and you can start reading even though the result isn’t fully baked yet.

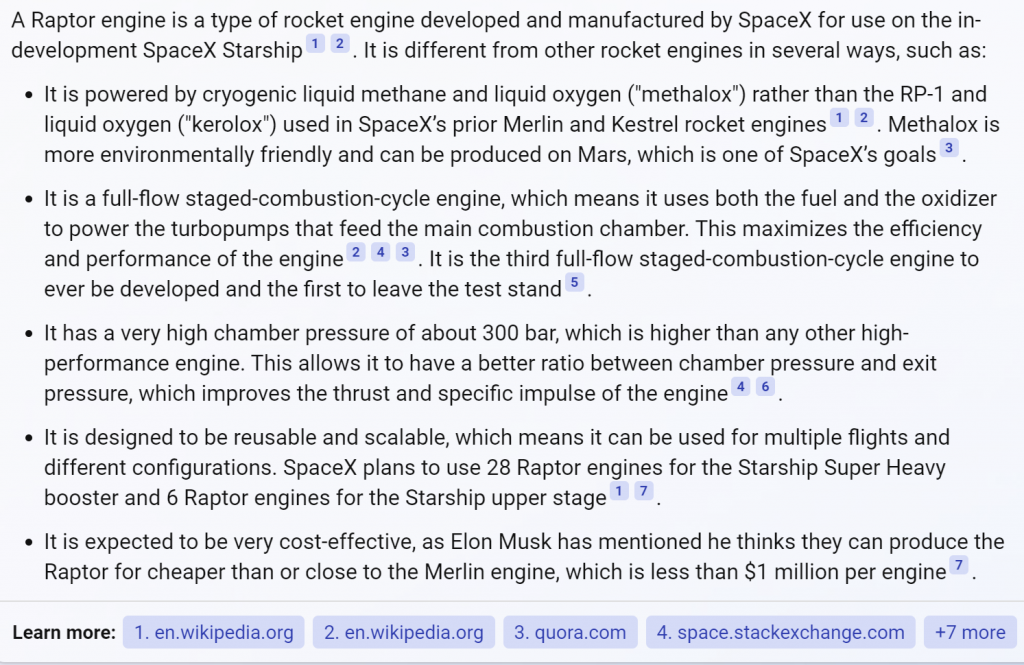

Zoomed in to make it more readable:

The result is quite impressive. You get a small essay that summarizes multiple sources, with links to these sources, and it suggests several follow-up questions, which you can just click. Or you can type in whatever crosses your mind.

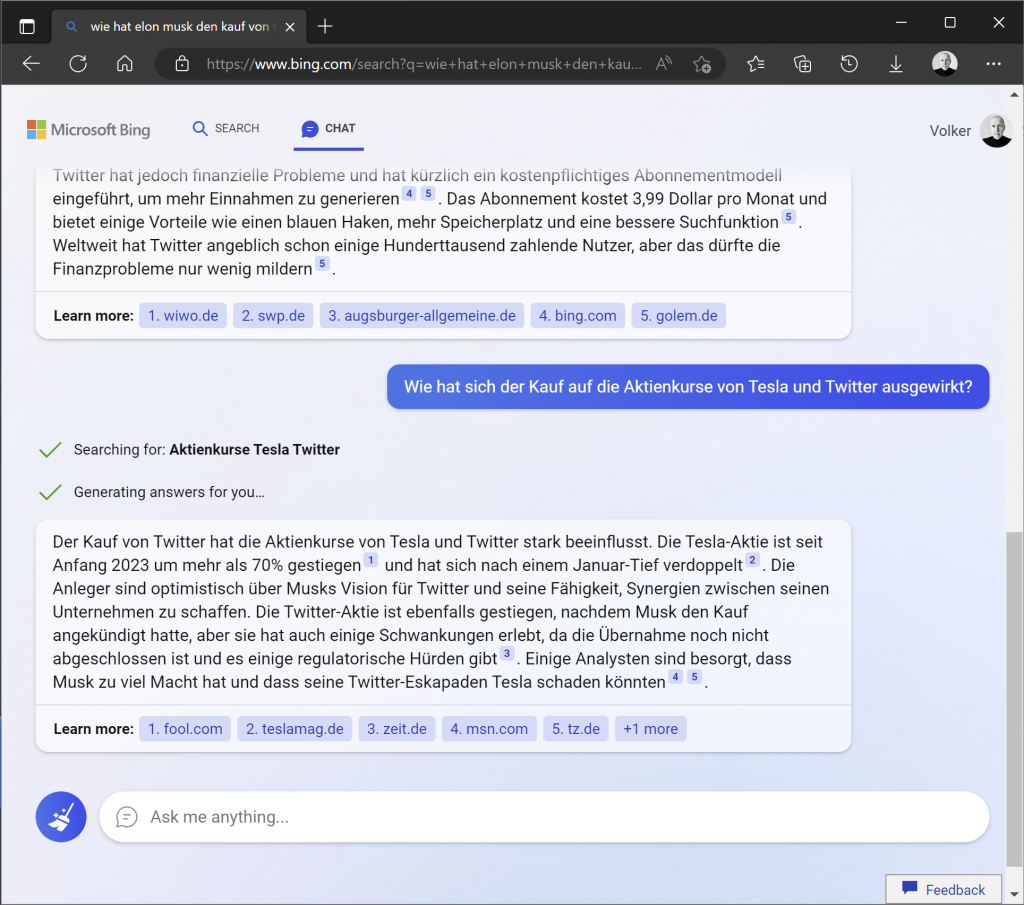

This works not only in English but also in German and other languages. You can set the interface language, but you are not bound to it. Bing answers questions in the language you type.

Bear in mind that this is a perfect bullshit generator that hallucinates occasionally, making up things as it goes. It’s only as good as its sources, but isn’t that true for all of us?

The danger lies in the perfectly formed answer. It’s confident, but not necessarily right. A McKinsey consultant in a box.

I think I’ve read that Bing includes search results in the response, something ChatGPT does not. Google certainly claims this for Bard.

Anyway, about typing out the answer for you. This is not a visual gimmick, but a consequence of how GPT-style models work. They predict “only” the next token. The original prompt is the basis for predicting the first token of the answer. The next token prediction is then conditioned on the original prompt plus the first token that the model just generated, and so on. The previously generated tokens are always included in the input for the next token prediction, which is what makes the model auto-regressive.
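To make that loop concrete, here is a minimal Python sketch. It is a toy, not how Bing or any GPT model is actually implemented: `TOY_TABLE`, `toy_next_token` and `generate` are names I made up, and the "model" is just a lookup table that looks at the last token, where a real LLM would score its whole vocabulary against the full context. What it does show is the shape of the loop: predict one token, append it to the input, repeat.

```python
from typing import Callable, Iterator, List

# Hypothetical stand-in for a language model: maps the last token to the next.
# A real LLM would use the entire context, not just its final token.
TOY_TABLE = {
    "what": "is",
    "is": "a",
    "a": "raptor",
    "raptor": "engine",
    "engine": "<end>",
}


def toy_next_token(context: List[str]) -> str:
    """Predict one token from the context (prompt plus tokens generated so far)."""
    if not context:
        return "<end>"
    return TOY_TABLE.get(context[-1], "<end>")


def generate(
    prompt: List[str],
    next_token: Callable[[List[str]], str] = toy_next_token,
    max_new_tokens: int = 20,
) -> Iterator[str]:
    """Yield tokens one at a time, feeding each one back into the context."""
    context = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(context)
        if token == "<end>":
            break
        context.append(token)  # the new token becomes part of the next input
        yield token            # a UI can display it immediately


if __name__ == "__main__":
    # Print tokens as they arrive, similar to an answer being typed out.
    for tok in generate(["what"]):
        print(tok, end=" ", flush=True)
    print()
```

Because `generate` yields each token as soon as it is predicted, the caller can show it right away, which is presumably why the answer appears to be typed out rather than delivered in one piece.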

Thank you, Mariano, I just learned something new. 😃